Attention Is All You Need - Paper Explained

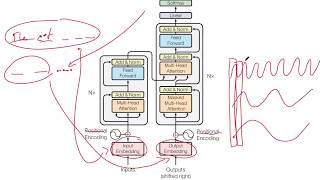

In this video, I'll try to present a comprehensive study of the renowned paper “Attention Is All You Need” by Ashish Vaswani and his co-authors.

This paper marked a major turning point in deep learning research. The Transformer architecture it introduced is now used in a variety of state-of-the-art models in natural language processing and beyond.

Chapters:

0:00 Abstract

0:39 Introduction

2:44 Model Details

3:20 Encoder

3:30 Input Embedding

5:22 Positional Encoding

11:05 Self-Attention

15:38 Multi-Head Attention

17:31 Add and Layer Normalization

20:38 Feed-Forward NN

23:40 Decoder

23:44 Decoder in Training and Testing Phase

27:31 Masked Multi-Head Attention

30:03 Encoder-Decoder Self-Attention

33:19 Results

35:37 Conclusion

Link to the paper:

https://arxiv.org/abs/1706.03762

Authors:

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin

Helpful Links:

"Vectoring Words (Word Embeddings)" by Computerphile:

• Vectoring Words (Word Embeddings) C...

"Transformer Architecture: The Positional Encoding" by Amirhossein Kazemnejad:

https://kazemnejad.com/blog/transform...

"The Illustrated Transformer" by Jay Alammar:

https://jalammar.github.io/illustrate...

Lennart Svensson's video on masked self-attention:

• Transformers Part 7 Decoder (2): ...

Lennart Svensson's video on encoder-decoder self-attention:

• Transformer Part 8 Decoder (3): E...

I'd like to express my gratitude to Dr. Nasersharif, my supervisor, for suggesting this paper to me.

Find me on: https://linktr.ee/HalflingWizard

#Transformer #Attention #Deep_Learning
