Entropy: Two Simple Ideas Behind Our Best Theory of Physics

To try everything Brilliant has to offer—free—for a full 30 days, visit https://brilliant.org/ParthG/. The first 200 of you will get 20% off Brilliant’s annual premium subscription.

Our most robust theory of physics so far seems to be #thermodynamics.

Here are two simple assumptions behind statistical mechanics, the small-scale, detailed description of thermodynamics. #statisticalmechanics #entropy

The Second Law of Thermodynamics essentially states that heat (or more precisely, thermal energy) cannot be transferred spontaneously from a colder object to a hotter object. Instead, when two objects at different temperatures are brought into thermal contact, thermal energy spontaneously flows from the hotter object to the colder one until both reach equilibrium at some temperature between their initial temperatures. This is the Clausius statement of the law.
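As a rough illustration of the Clausius picture, here is a minimal Python sketch. It assumes two objects with constant heat capacities that exchange energy only with each other; the function names and the numbers are made up for this example. Under those assumptions, energy conservation fixes the final temperature, and the total entropy change comes out positive.

```python
import math

def equilibrium_temperature(c_hot, t_hot, c_cold, t_cold):
    """Final shared temperature (kelvin), from energy conservation.

    Assumes constant heat capacities (J/K) and no heat lost to the surroundings.
    """
    return (c_hot * t_hot + c_cold * t_cold) / (c_hot + c_cold)

def total_entropy_change(c_hot, t_hot, c_cold, t_cold):
    """Total entropy change (J/K) of both objects as they equilibrate."""
    t_final = equilibrium_temperature(c_hot, t_hot, c_cold, t_cold)
    ds_hot = c_hot * math.log(t_final / t_hot)     # hot object cools: negative
    ds_cold = c_cold * math.log(t_final / t_cold)  # cold object warms: positive
    return ds_hot + ds_cold

# Hypothetical example: two identical objects at 400 K and 300 K.
t_final = equilibrium_temperature(100.0, 400.0, 100.0, 300.0)
delta_s = total_entropy_change(100.0, 400.0, 100.0, 300.0)
print(t_final)      # 350.0
print(delta_s > 0)  # True
```

The final temperature lands between the two starting temperatures, and the entropy gained by the colder object outweighs the entropy lost by the hotter one.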

Thermodynamics is the study of heat and energy within systems on a large scale (such as an entire gas, liquid, or solid). Statistical mechanics is the study of the particles making up each system we study (such as the molecules in a gas). In principle, this small-scale study allows us to make very precise predictions of how the system will behave. However, it is very difficult in practice because of the often huge number of particles in each system. So instead we need to find ways to link the small-scale theory of statistical mechanics to the large-scale theory of thermodynamics.

The first assumption of statistical mechanics is that each microstate of a given system (each possible arrangement of energy among the particles in the system) is just as likely as any of the other possible arrangements. This is the Law of Equal A Priori Probabilities.
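To make the idea of equally likely microstates concrete, here is a small Python sketch. The toy system is an assumption made for illustration, not something taken from the video: four particles that can each hold 0 or 1 unit of energy, with the total energy fixed at 2 units. The function name `microstates` is hypothetical.

```python
from fractions import Fraction
from itertools import product

def microstates(n_particles, total_energy):
    """List every arrangement of 0/1 energy units with the given total."""
    return [
        state
        for state in product((0, 1), repeat=n_particles)
        if sum(state) == total_energy
    ]

states = microstates(4, 2)
omega = len(states)              # number of microstates
probability = Fraction(1, omega) # first assumption: each is equally likely

for state in states:
    print(state, probability)
print(omega)  # 6
```

Each of the six arrangements gets the same probability, 1/6, simply because nothing in the assumption favors one arrangement over another.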

The second assumption is that each microstate corresponds to a large-scale property of the system, such as volume, pressure, or temperature. It also states that we should be able to link a measured property of a system to the weighted average of the individual microstate properties over the measurement period.
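The weighted average can be sketched in Python as follows. This is only an illustration under stated assumptions: the toy system (four particles holding 0 or 1 unit of energy each, 2 units in total), the "property" being measured (the energy held by the first two particles), and the function names are all invented for this example. It also averages over microstates using their probabilities instead of simulating a measurement over time, and treating those two averages as equivalent is itself an assumption.

```python
from fractions import Fraction
from itertools import product

def weighted_average(values, weights):
    """Average of values, each weighted by the matching entry in weights."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Toy system: 4 particles, each with 0 or 1 unit of energy, 2 units in total.
states = [s for s in product((0, 1), repeat=4) if sum(s) == 2]

# Equal weights, following the first assumption.
weights = [Fraction(1, len(states))] * len(states)

# Property of each microstate: energy held by the first two particles.
left_energy = [s[0] + s[1] for s in states]

measured = weighted_average(left_energy, weights)
print(measured)  # 1
```

On average, half of the energy sits in the first two particles, which is what we would expect a large-scale measurement of this toy system to report.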

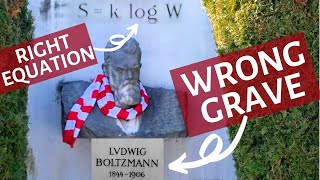

By the way, Boltzmann discovered the equation linking omega (the number of microstates) to entropy, which led to the understanding of entropy as disorder. Since the entropy of the universe increases over time, it is thanks to his work that we expect the universe to (probably) end in a highly disordered state.
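For reference, Boltzmann's equation is usually written as below, where S is the entropy, k_B is the Boltzmann constant, and Ω is the number of microstates. The worked value for Ω = 6 is just an illustrative number, not one taken from the video.

```latex
S = k_B \ln \Omega
\qquad \text{e.g. } \Omega = 6 \;\Rightarrow\; S = k_B \ln 6 \approx 1.79\, k_B
```

More available microstates means a larger Ω and therefore a larger entropy.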

Thanks for watching, please do check out my links:

MERCH https://parthgsmerchstand.creator...

INSTAGRAM @parthvlogs

PATREON patreon.com/parthg

MUSIC CHANNEL Parth G's Shenanigans

Here are some affiliate links for things I use!

Quantum Physics Book I Enjoy: https://amzn.to/3sxLlgL

My Camera: https://amzn.to/2SjZzWq

ND Filter: https://amzn.to/3qoGwHk

Useful Resources

1) My Entropy Video: • Is ENTROPY Really a "Measure of Disor...

2) Detailing the Assumptions of Stat Mech: https://ocw.mit.edu/courses/3012fun...

Timestamps:

0:00 The Second Law of Thermodynamics and Entropy

3:04 Sponsor Message: Check Out Brilliant.org in the Description

4:14 Microstates of a System

6:03 The First Assumption of Statistical Mechanics

7:50 The Second Assumption of Statistical Mechanics

This video was sponsored by Brilliant. #ad