Gail Weiss: Thinking Like Transformers

Paper presented by Gail Weiss to the Neural Sequence Model Theory Discord on the 24th of February 2022.

Gail's references:

On Transformers and their components:

Thinking Like Transformers (Weiss et al, 2021) https://arxiv.org/abs/2106.06981 (REPL here: https://github.com/tech-srl/RASP)

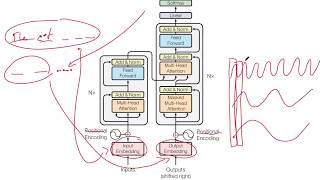

Attention is All You Need (Vaswani et al, 2017) https://arxiv.org/abs/1706.03762

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (Devlin et al, 2018) https://arxiv.org/abs/1810.04805

Improving Language Understanding by Generative Pre-Training (Radford et al, 2018) https://s3uswest2.amazonaws.com/op...

Are Transformers universal approximators of sequence-to-sequence functions? (Yun et al, 2019) https://arxiv.org/abs/1912.10077

Theoretical Limitations of Self-Attention in Neural Sequence Models (Hahn, 2019) https://arxiv.org/abs/1906.06755

On the Ability and Limitations of Transformers to Recognize Formal Languages (Bhattamishra et al, 2020) https://arxiv.org/abs/2009.11264

Attention is Turing-Complete (Perez et al, 2021) https://jmlr.org/papers/v22/20-302.html

Statistically Meaningful Approximation: a Case Study on Approximating Turing Machines with Transformers (Wei et al, 2021) https://arxiv.org/abs/2107.13163

Multilayer feedforward networks are universal approximators (Hornik et al, 1989) https://www.cs.cmu.edu/~epxing/Class/...

Deep Residual Learning for Image Recognition (He et al, 2016) https://www.cvfoundation.org/openacc...

Universal Transformers (Dehghani et al, 2018) https://arxiv.org/abs/1807.03819

Improving Transformer Models by Reordering their Sublayers (Press et al, 2019) https://arxiv.org/abs/1911.03864

On RNNs:

Explaining Black Boxes on Sequential Data using Weighted Automata (Ayache et al, 2018) https://arxiv.org/abs/1810.05741

Extraction of rules from discrete-time recurrent neural networks (Omlin and Giles, 1996) https://www.semanticscholar.org/paper...

Extracting Automata from Recurrent Neural Networks Using Queries and Counterexamples (Weiss et al, 2017) https://arxiv.org/abs/1711.09576

Connecting Weighted Automata and Recurrent Neural Networks through Spectral Learning (Rabusseau et al, 2018) https://arxiv.org/abs/1807.01406

On the Practical Computational Power of Finite Precision RNNs for Language Recognition (Weiss et al, 2018) https://aclanthology.org/P18-2117/

Sequential Neural Networks as Automata (Merrill, 2019) https://aclanthology.org/W19-3901.pdf

A Formal Hierarchy of RNN Architectures (Merrill et al, 2020) https://aclanthology.org/2020.aclmai...

Inferring Algorithmic Patterns with Stack-Augmented Recurrent Nets (Joulin and Mikolov, 2015) https://proceedings.neurips.cc/paper/...

Learning to Transduce with Unbounded Memory (Grefenstette et al, 2015) https://proceedings.neurips.cc/paper/...

Paper mentioned in discussion at the end:

Attention is Not All You Need: Pure Attention Loses Rank Doubly Exponentially with Depth (Dong et al, 2021) https://icml.cc/virtual/2021/oral/9822
