Free YouTube views likes and subscribers? Easily!

Low-rank Adaption of Large Language Models: Explaining the Key Concepts Behind LoRA

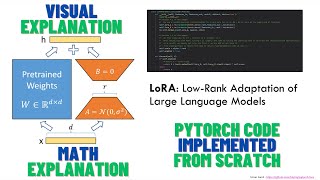

In this video, I go over how LoRA works and why it's crucial for affordable Transformer finetuning.

LoRA learns lowrank matrix decompositions to slash the costs of training huge language models. It adapts only lowrank factors instead of entire weight matrices, achieving major memory and performance wins.

LoRA Paper: https://arxiv.org/pdf/2106.09685.pdf

Intrinsic Dimensionality Paper: https://arxiv.org/abs/2012.13255

About me:

Follow me on LinkedIn: / csalexiuk

Check out what I'm working on: https://getox.ai/

Recommended

![[1hr Talk] Intro to Large Language Models](https://i.ytimg.com/vi/zjkBMFhNj_g/mqdefault.jpg)

![The moment we stopped understanding AI [AlexNet]](https://i.ytimg.com/vi/UZDiGooFs54/mqdefault.jpg)

![BERT explained: Training, Inference, BERT vs GPT/LLamA, Fine tuning, [CLS] token](https://i.ytimg.com/vi/90mGPxR2GgY/mqdefault.jpg)