Stanford CS224N NLP with Deep Learning | Winter 2021 | Lecture 7 - Translation Seq2Seq Attention

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/3CnshYl

This lecture covers:

1. Introduce a new task: Machine Translation [15 mins]. Machine Translation (MT) is the task of translating a sentence x from one language (the source language) to a sentence y in another language (the target language).

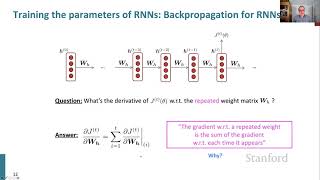

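2. A new neural architecture: sequence-to-sequence [45 mins]. Neural Machine Translation (NMT) is a way to do Machine Translation with a single end-to-end neural network. The neural network architecture is called a sequence-to-sequence model (aka seq2seq), and it involves two RNNs: an encoder RNN that reads the source sentence and a decoder RNN that generates the target sentence conditioned on that encoding.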

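3. A new neural technique: attention [20 mins]. Attention provides a solution to the bottleneck problem: in a basic seq2seq model, the encoder must squeeze all information about the source sentence into its final hidden state. Core idea: on each step of the decoder, use a direct connection to the encoder to focus on a particular part of the source sequence.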
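To make the encoder-decoder data flow and the attention step concrete, here is a minimal pure-Python sketch. It is not the course's code, and several choices are assumptions: the names `TinySeq2Seq`, `encode`, `attend` and `greedy_decode` are hypothetical, simple tanh RNN cells stand in for the LSTMs an NMT system would more typically use, attention scores are plain dot products (one of several possible scoring functions), and the weights are random and untrained, so the emitted token ids are meaningless. What it does show is the structure: an encoder RNN reads the source, a decoder RNN starts from the encoder's final state, and on every decoder step attention weights over all encoder states form a context vector that is concatenated with the decoder state before predicting the next token.

```python
import math
import random

def matvec(W, x):
    # Matrix-vector product for lists of lists.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def add(*vs):
    # Element-wise sum of equally sized vectors.
    return [sum(vals) for vals in zip(*vs)]

def tanh_vec(v):
    return [math.tanh(a) for a in v]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

class TinySeq2Seq:
    """Hypothetical toy model: tanh-RNN encoder + tanh-RNN decoder with
    dot-product attention. Random weights: it only illustrates data flow."""

    def __init__(self, src_vocab, tgt_vocab, hidden=8, seed=0):
        rng = random.Random(seed)
        self.h = hidden
        self.src_emb = rand_matrix(src_vocab, hidden, rng)
        self.tgt_emb = rand_matrix(tgt_vocab, hidden, rng)
        self.W_enc = rand_matrix(hidden, hidden, rng)
        self.U_enc = rand_matrix(hidden, hidden, rng)
        self.W_dec = rand_matrix(hidden, hidden, rng)
        self.U_dec = rand_matrix(hidden, hidden, rng)
        # Output layer reads [decoder state; context] (2*hidden) -> target vocab logits.
        self.W_out = rand_matrix(tgt_vocab, 2 * hidden, rng)

    def encode(self, src_ids):
        # Encoder RNN: one hidden state per source token.
        h = [0.0] * self.h
        states = []
        for i in src_ids:
            h = tanh_vec(add(matvec(self.W_enc, h), matvec(self.U_enc, self.src_emb[i])))
            states.append(h)
        return states

    def attend(self, s, enc_states):
        # Dot-product score of the decoder state against every encoder state,
        # softmax into a distribution, then a weighted sum (the context vector).
        scores = [sum(a * b for a, b in zip(s, h)) for h in enc_states]
        alpha = softmax(scores)
        context = [sum(a * h[k] for a, h in zip(alpha, enc_states)) for k in range(self.h)]
        return alpha, context

    def greedy_decode(self, src_ids, bos=0, eos=1, max_len=10):
        enc_states = self.encode(src_ids)
        s = enc_states[-1]  # decoder starts from the final encoder state
        prev, output, attn = bos, [], []
        for _ in range(max_len):
            # Decoder RNN step, fed the previously generated token.
            s = tanh_vec(add(matvec(self.W_dec, s), matvec(self.U_dec, self.tgt_emb[prev])))
            alpha, context = self.attend(s, enc_states)
            logits = matvec(self.W_out, s + context)  # list concatenation = [s; context]
            prev = max(range(len(logits)), key=logits.__getitem__)  # greedy argmax
            attn.append(alpha)
            if prev == eos:
                break
            output.append(prev)
        return output, attn

model = TinySeq2Seq(src_vocab=5, tgt_vocab=6)
tokens, attn = model.greedy_decode([2, 3, 4])
print(tokens, [round(w, 2) for w in attn[0]])
```

Each row of `attn` is a probability distribution over source positions, which is what lets the decoder focus on different source words at different steps; in a trained model these rows often resemble a soft word alignment. The plain dot-product score was chosen here only because it needs no extra parameters.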

To learn more about this course visit: https://online.stanford.edu/courses/c...

To follow along with the course schedule and syllabus visit: http://web.stanford.edu/class/cs224n/

Professor Christopher Manning

Thomas M. Siebel Professor in Machine Learning, Professor of Linguistics and of Computer Science

Director, Stanford Artificial Intelligence Laboratory (SAIL)

#naturallanguageprocessing #deeplearning